🔥 We have released the inference script, Gradio-based web demo, and model checkpoints on Hugging Face and ModelScope. Check out the code on GitHub!

🔍 We are recruiting Ph.D. students and interns interested in world model research. If you are passionate, please contact: Lue Fan (lue.fan@ia.ac.cn)

We are excited to see that the Waymo World Model shares a similar vision with NeoVerse. NeoVerse can generate multi-view videos from a single dashcam video, as well as diverse counterfactual scenarios with long-tail objects.

Reference Dashcam Video

Starting from a single front-view video, NeoVerse can generate multi-view consistent videos.

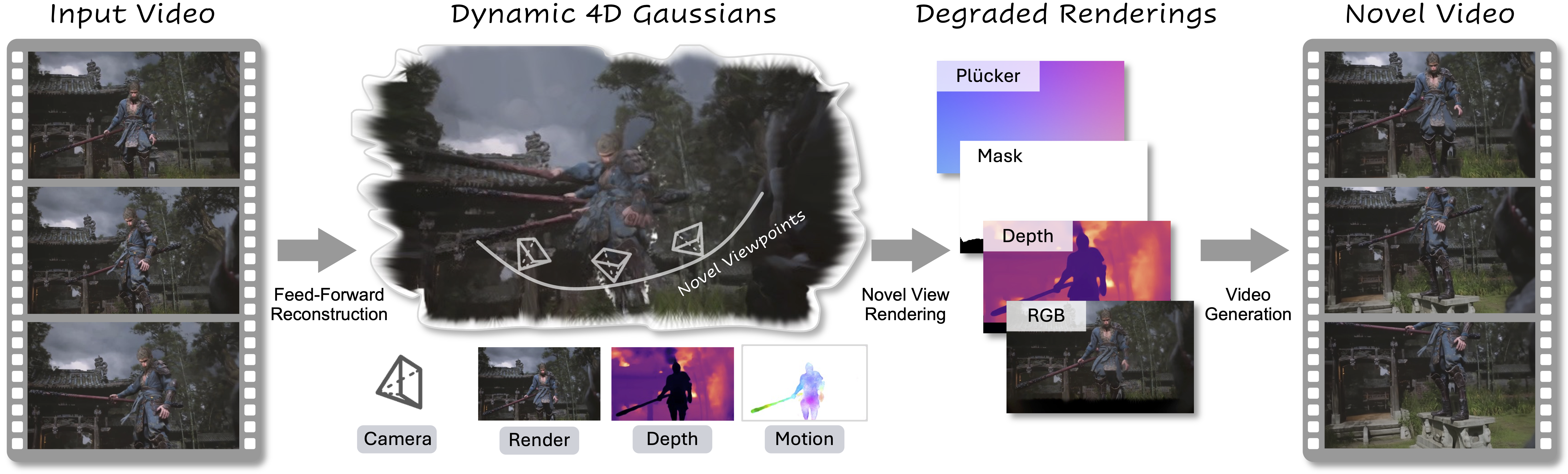

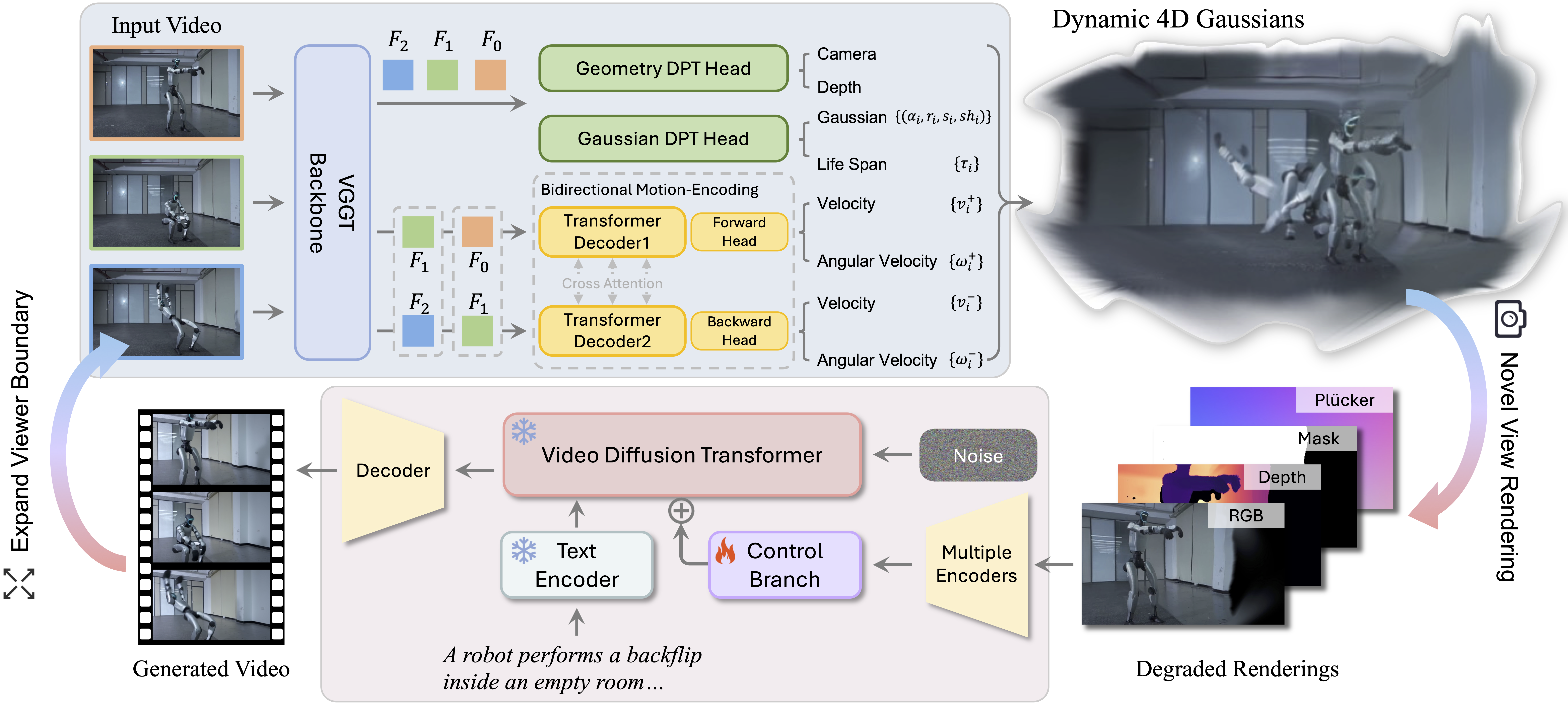

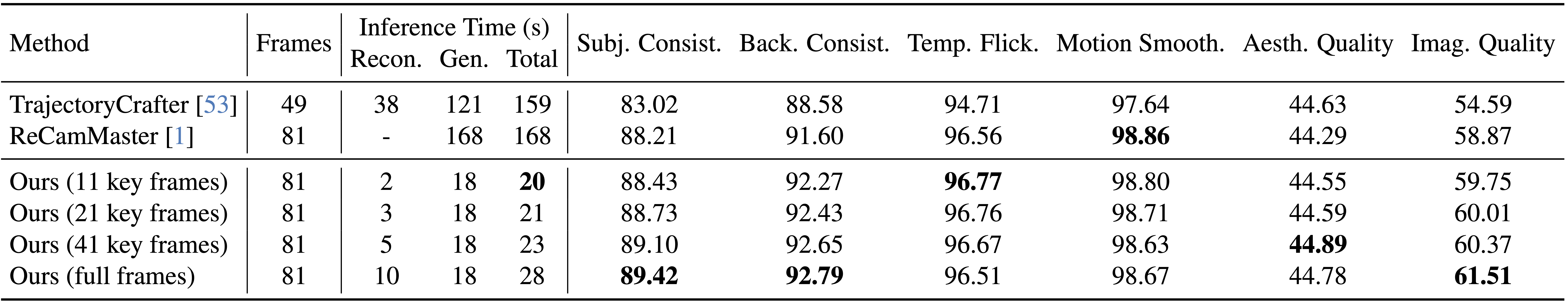

In this paper, we propose NeoVerse, a versatile 4D world model that is capable of 4D reconstruction, novel-trajectory video generation, and rich downstream applications. We first identify a common limitation of scalability in current 4D world modeling methods, caused either by expensive and specialized multi-view 4D data or by cumbersome training pre-processing. In contrast, our NeoVerse is built upon a core philosophy that makes the full pipeline scalable to diverse in-the-wild monocular videos. Specifically, NeoVerse features pose-free feed-forward 4D reconstruction, online monocular degradation pattern simulation, and other well-aligned techniques. These designs empower NeoVerse with versatility and generalization to various domains. Meanwhile, NeoVerse achieves state-of-the-art performance in standard reconstruction and generation benchmarks.

Framework of NeoVerse. In the reconstruction part, we propose a pose-free feed-forward 4DGS reconstruction model with bidirectional motion modeling. The degraded renderings in novel viewpoints from 4DGS are input to the generation model as conditions. During training, the degraded rendering conditions are simulated from monocular videos, and the original videos themselves serve as targets.
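The self-supervised pairing described in this caption (a degraded rendering as the condition, the original video as the target) can be sketched as below. This is only an illustrative sketch: `simulate_degradation`, `make_training_pair`, and the `hole_fraction` parameter are hypothetical names, and the random-hole degradation is a simple stand-in, not the degradation pattern simulation actually used in NeoVerse.

```python
import random


def simulate_degradation(video, rng, hole_fraction=0.2):
    """Hypothetical stand-in for degradation simulation.

    Blanks out random pixels to mimic holes in a novel-view rendering.
    This is NOT the degradation model used by NeoVerse.
    `video` is a list of frames; each frame is a 2D list of floats.
    """
    degraded, mask = [], []
    for frame in video:
        # True means the pixel is kept, False means it becomes a hole.
        frame_mask = [[rng.random() >= hole_fraction for _ in row] for row in frame]
        degraded.append([
            [px if keep else 0.0 for px, keep in zip(row, mask_row)]
            for row, mask_row in zip(frame, frame_mask)
        ])
        mask.append(frame_mask)
    return degraded, mask


def make_training_pair(video, rng):
    """Build one (condition, target) pair from a single monocular video."""
    condition, mask = simulate_degradation(video, rng)
    target = video  # the original video itself is the supervision signal
    return condition, target, mask


rng = random.Random(0)
# Toy grayscale "video": 4 frames of 16x16 pixels.
video = [[[rng.random() for _ in range(16)] for _ in range(16)] for _ in range(4)]
condition, target, mask = make_training_pair(video, rng)
```

The point of the sketch is the data flow rather than the degradation itself: no multi-view capture is needed, because both the condition and the target are derived from the same monocular clip.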

NeoVerse is compatible with powerful distillation LoRAs, which enable inference in under 30 seconds. Runtime is measured on a single A800 GPU.

@article{yang2026neoverse,
  author  = {Yang, Yuxue and Fan, Lue and Shi, Ziqi and Peng, Junran and Wang, Feng and Zhang, Zhaoxiang},
  title   = {NeoVerse: Enhancing 4D World Model with in-the-wild Monocular Videos},
  journal = {arXiv preprint arXiv:2601.00393},
  year    = {2026},
}